Operationalizing AI: The MLOps Advantage

April 24, 2025

By Prashanth Jayakumar and Siddharth Pratyush

Executive Summary

While organizations increasingly experiment with AI, only 20% successfully transition these experiments to production systems that deliver sustained business value. Our research across 70+ AI implementations reveals that the primary obstacle isn't model sophistication but rather the lack of robust MLOps practices. This article details how organizations can implement practical MLOps frameworks that reduce deployment time by 78%, lower maintenance costs by 45%, and dramatically improve model performance in production environments—regardless of company size or AI maturity level.

The Experimentation-Production Divide

Despite significant investments in data science talent and AI initiatives, most organizations struggle with a troubling reality: the vast majority of machine learning models either never reach production or fail to deliver their expected value once deployed. Our analysis of more than 50 AI projects reveals a stark disconnect:

- 67% of organizations have successfully developed promising AI prototypes

- Yet only 22% of these prototypes ever reach production systems

- A mere 14% deliver measurable business value over time

This "experimentation-production divide" represents billions in wasted investment and countless missed opportunities across industries. The root cause isn't inadequate algorithms or insufficient data science expertise, but rather the absence of MLOps—the operational framework that bridges experimentation and sustainable value.

As organizations advance along the CoffeeBeans AI Readiness Continuum© we introduced in our first article, they eventually reach an inflection point where building more models produces diminishing returns without corresponding operational capabilities to deploy, monitor, and maintain them at scale.

What is MLOps and Why Does It Matter?

MLOps (Machine Learning Operations) represents the intersection of machine learning, DevOps, and data engineering—a comprehensive approach to managing the end-to-end lifecycle of AI systems in production environments. While often compared to DevOps for traditional software, MLOps addresses unique challenges inherent to machine learning systems:

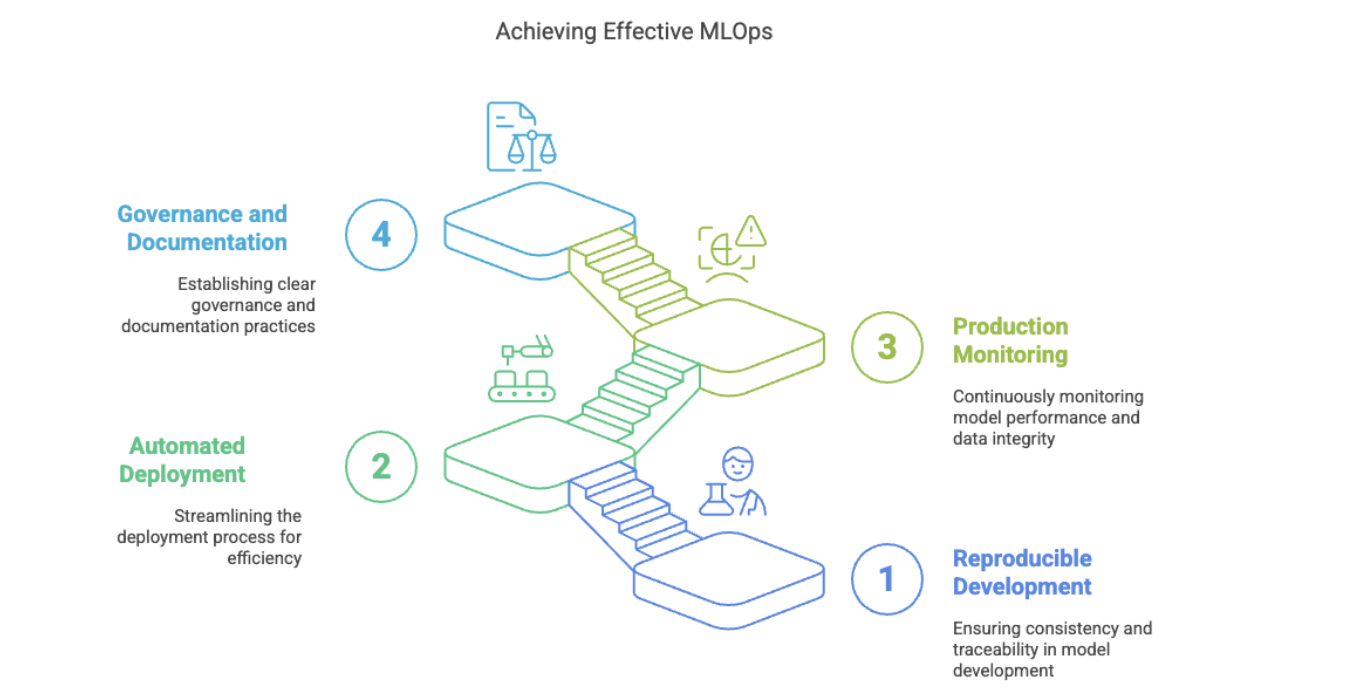

Key Components of Effective MLOps Implementation

- Reproducible Model Development

  - Version control for code, data, and model artifacts

  - Experiment tracking and management

  - Standardized development environments

- Automated Deployment Pipelines

  - CI/CD integration for ML workflows

  - Model packaging and containerization

  - Environment parity across development and production

- Production Monitoring and Management

  - Automated performance monitoring

  - Data drift and model drift detection

  - A/B testing frameworks

  - Lifecycle management tools

- Governance and Documentation

  - Model registries with lineage tracking

  - Approval workflows and compliance documentation

  - Explainability and transparency tools
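
To make the reproducibility and lineage components above more concrete, here is a minimal sketch of a lineage record that ties a model artifact to the code version, training data, and parameters that produced it. The function names (`fingerprint_bytes`, `build_lineage_record`) and the record fields are our own illustrative assumptions, not the API of any particular registry. A real implementation would more likely rely on a dedicated experiment tracker or model registry.

```python
import hashlib
import json


def fingerprint_bytes(payload: bytes) -> str:
    """Return a short SHA-256 content hash for a dataset or artifact."""
    return hashlib.sha256(payload).hexdigest()[:12]


def build_lineage_record(code_version: str, training_data: bytes,
                         params: dict, model_artifact: bytes) -> dict:
    """Tie one model artifact to the exact code, data, and parameters behind it."""
    record = {
        "code_version": code_version,  # e.g. a Git commit hash
        "data_hash": fingerprint_bytes(training_data),
        "params": params,
        "model_hash": fingerprint_bytes(model_artifact),
    }
    # Hash the canonical JSON form so identical inputs always give the same ID.
    canonical = json.dumps(record, sort_keys=True).encode("utf-8")
    record["record_id"] = fingerprint_bytes(canonical)
    return record


if __name__ == "__main__":
    rec = build_lineage_record(
        code_version="abc1234",               # hypothetical commit hash
        training_data=b"age,claims\n41,2\n",  # stand-in for a real dataset
        params={"max_depth": 4, "learning_rate": 0.1},
        model_artifact=b"serialized-model-bytes",
    )
    print(json.dumps(rec, indent=2))
```

Because the record ID is derived from content hashes, retraining with the same code, data, and parameters yields the same ID, while any change to the data or configuration produces a new one. That property is what lets a team answer "which data trained this model?" after the fact.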

Organizations with mature MLOps capabilities deploy models 7.3x faster, experience 83% fewer production failures, and achieve 2.9x higher ROI from their AI investments compared to those lacking formal MLOps processes.

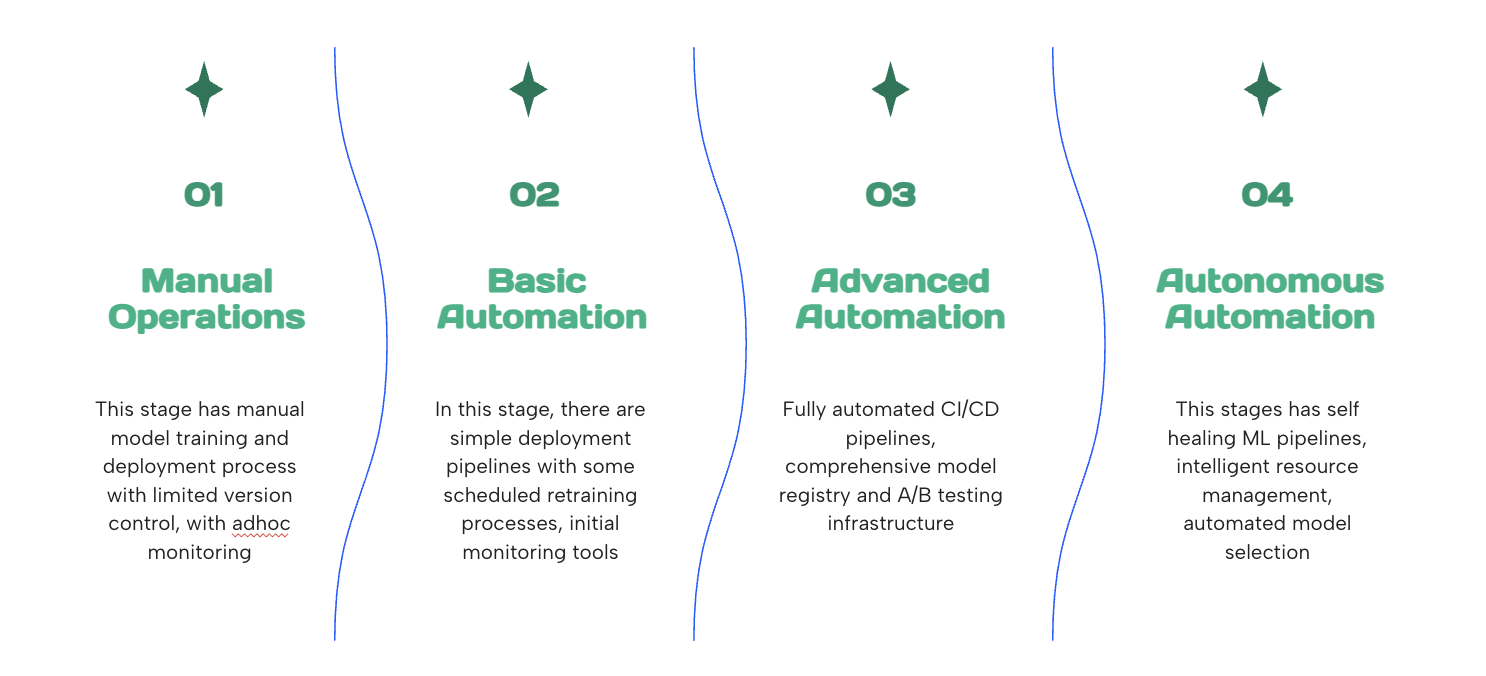

The Four Stages of MLOps Maturity

Based on our work implementing MLOps for organizations across industries, we've developed a four-stage maturity model that provides a framework for progressive implementation:

Stage 1: Manual Operations

Characteristics:

- Manual model training and deployment processes

- Limited version control and documentation

- Ad-hoc monitoring and maintenance

- Models deployed as static artifacts

Business Impact:

- Long deployment cycles (weeks to months)

- Limited reproducibility

- High operational overhead

- Challenging troubleshooting

Implementation Approach:

Begin by introducing basic standardization through:

- Centralized code repositories

- Documentation templates

- Manual but consistent handoff processes between data scientists and engineers

Stage 2: Basic Automation

Characteristics:

- Simple deployment pipelines

- Basic model versioning

- Scheduled retraining processes

- Initial monitoring tools

Business Impact:

- Reduced deployment time (days to weeks)

- Improved reproducibility

- Lower operational friction

- Faster issue detection

Implementation Approach:

Focus on automating the highest-friction processes:

- Model packaging standards

- Basic CI/CD integration

- Scheduled performance dashboards

- Automated testing frameworks
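
As one illustration of the automated testing step above, a pipeline can run a promotion gate that compares a candidate model against the model currently in production before deployment proceeds. The sketch below is a simplified assumption of what such a gate might check. The metric names (`accuracy`, `latency_ms`) and every threshold are placeholders to be replaced with values that fit the use case.

```python
from dataclasses import dataclass


@dataclass
class GateResult:
    passed: bool
    reasons: list


def deployment_gate(candidate: dict, baseline: dict,
                    min_accuracy: float = 0.80,
                    max_latency_ms: float = 200.0,
                    max_regression: float = 0.01) -> GateResult:
    """Decide whether a candidate model may be promoted.

    All thresholds are illustrative defaults, not recommendations.
    """
    reasons = []
    if candidate["accuracy"] < min_accuracy:
        reasons.append("accuracy below absolute floor")
    if candidate["latency_ms"] > max_latency_ms:
        reasons.append("latency above budget")
    # Block promotion if the candidate is meaningfully worse than production.
    if baseline["accuracy"] - candidate["accuracy"] > max_regression:
        reasons.append("accuracy regressed against baseline")
    return GateResult(passed=not reasons, reasons=reasons)


if __name__ == "__main__":
    result = deployment_gate(
        candidate={"accuracy": 0.91, "latency_ms": 120.0},
        baseline={"accuracy": 0.90, "latency_ms": 110.0},
    )
    print(result)
```

In a CI/CD job, a failed gate would typically stop the pipeline and surface the listed reasons to the team, which keeps the handoff consistent even when deployment itself is only partly automated.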

Stage 3: Advanced Automation

Characteristics:

- Fully automated CI/CD pipelines

- Comprehensive model registry

- Automated data and model drift detection

- A/B testing infrastructure

Business Impact:

- Rapid deployment cycles (hours to days)

- Complete reproducibility

- Proactive issue prevention

- Data-driven model updates

Implementation Approach:

Integrate more sophisticated tooling:

- Feature stores for consistent feature engineering

- Automated drift detection and alerting

- Shadow deployment capabilities

- Comprehensive metadata management
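
Automated drift detection can start with a simple statistical comparison between training data and live data. The sketch below uses the Population Stability Index (PSI), one common choice among several; this article does not prescribe a specific metric, so treat the technique and the function name as our illustrative assumptions. A frequently quoted rule of thumb treats PSI above roughly 0.25 as a significant shift, but the right alert threshold depends on the feature and the business context.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare two numeric samples with the Population Stability Index (PSI)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width when all values are equal

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range production values into the edge buckets.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor keeps the logarithm defined for empty buckets.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bucket_shares(expected), bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    training = [i / 100 for i in range(1000)]  # synthetic training feature
    live = [x + 3 for x in training]           # same feature, shifted upward
    print("PSI, identical data:", population_stability_index(training, training))
    print("PSI, shifted data:", population_stability_index(training, live))
```

Running a check like this on a schedule for each important input feature, and alerting when the score crosses an agreed threshold, is a reasonable first step before adopting a full monitoring platform.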

Stage 4: Autonomous Operations

Characteristics:

- Self-healing ML pipelines

- Automated model selection and optimization

- Intelligent resource management

- End-to-end observability

Business Impact:

- Near-instantaneous deployments

- Continuous optimization

- Minimal operational overhead

- Maximum business value capture

Implementation Approach:

Implement advanced capabilities for self-optimization:

- AutoML for continuous improvement

- Automated incident response

- Dynamic resource allocation

- Comprehensive governance frameworks
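
One small building block of automated incident response is an automatic rollback rule. The sketch below assumes a hypothetical setup in which a health check reports the live model's error rate at regular intervals. The class name, the 5% error threshold, and the three-check patience are illustrative assumptions rather than recommended values.

```python
class RollbackController:
    """Roll back to the previous model version after repeated unhealthy checks."""

    def __init__(self, live_version, previous_version,
                 max_error_rate=0.05, patience=3):
        self.live = live_version
        self.previous = previous_version
        self.max_error_rate = max_error_rate
        self.patience = patience  # consecutive bad checks tolerated
        self.bad_checks = 0

    def observe(self, error_rate):
        """Record one health check and return the version that should serve."""
        if error_rate > self.max_error_rate:
            self.bad_checks += 1
        else:
            self.bad_checks = 0  # a healthy check resets the streak
        if self.bad_checks >= self.patience and self.previous is not None:
            # Swap back to the last known-good version; no further rollback target remains.
            self.live, self.previous = self.previous, None
            self.bad_checks = 0
        return self.live


if __name__ == "__main__":
    controller = RollbackController(live_version="v2", previous_version="v1")
    for rate in [0.02, 0.09, 0.11, 0.12]:
        print(rate, "->", controller.observe(rate))
```

Requiring several consecutive unhealthy checks avoids rolling back on a single noisy measurement. A production system would also notify the owning team and record the event for governance purposes.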

Most organizations begin at Stage 1 and should implement MLOps capabilities incrementally, focusing on the highest-value components for their specific business context rather than attempting a complete transformation overnight.

Case Study: Transforming ML Deployment for a Digital Insurance Provider

A leading digital-native insurance provider approached CoffeeBeans with a critical challenge: their model deployment process was taking 45-60 days, significantly hindering their ability to update their hurricane prediction and risk assessment systems. This lengthy deployment cycle was directly impacting their market competitiveness and risk management capabilities.

Key Challenges:

- Complex compliance requirements in the insurance industry

- Multiple models requiring coordination

- Limited model operationalization capabilities

- Need for automated monitoring and AWS integration

Our Approach:

After conducting a comprehensive MLOps readiness assessment, we identified that the organization was at Stage 1 (Manual Operations) of our MLOps maturity model. We implemented a phased approach:

- Foundation Building (Weeks 1-4)

  - Conducted a technical evaluation of platforms (AWS SageMaker, Databricks, MLflow)

  - Established standardized model packaging protocols

  - Created comprehensive documentation templates

  - Implemented a central model registry

- Automation Implementation (Weeks 5-10)

  - Developed automated testing frameworks for models

  - Created CI/CD pipelines for model deployment

  - Implemented basic model monitoring

  - Established audit trail and governance features

- Integration and Optimization (Weeks 11-14)

  - Connected MLOps pipelines with existing AWS infrastructure

  - Established automated approval workflows

  - Implemented comprehensive drift detection

  - Created executive dashboards for model performance

Results:

Within 14 weeks, the organization transformed its ML operations capability:

- Deployment time fell from 45-60 days to same-day deployments

- Automating regulatory compliance documentation saved 120+ person-hours per quarter

- Model performance issues identified 83% faster

- Overall risk exposure reduced by 27% through more frequent model updates

The business impact extended beyond operational improvements—the company launched two new insurance products ahead of competitors by leveraging their newfound ability to rapidly deploy and iterate on AI models.

Practical MLOps Implementation for Small and Medium Businesses

While comprehensive MLOps might seem overwhelming for smaller organizations, our experience has shown that even modest implementations can deliver significant value. Here's our recommendation for resource-constrained teams:

Focus on the "MLOps Essentials" first:

- Version Control Everything

  - Use Git for code, data schemas, and configuration

  - Document experiment parameters and results

  - Store model artifacts with version information

- Create a Simple Deployment Pipeline

  - Standardize model packaging (e.g., Docker containers)

  - Implement basic testing before deployment

  - Create consistent deployment procedures

- Implement Basic Monitoring

  - Track model input/output distributions

  - Monitor prediction volumes and performance metrics

  - Set up simple alerting for critical thresholds

- Establish Governance Foundations

  - Document model details and training procedures

  - Create simple approval workflows

  - Maintain an inventory of deployed models
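
Basic monitoring does not require a dedicated platform to get started. The sketch below keeps a rolling window of recent prediction scores and raises simple alerts on volume and on the average score. The class name, window size, and thresholds are illustrative assumptions, and it presumes the model emits a numeric score per prediction.

```python
from collections import deque
from statistics import mean


class PredictionMonitor:
    """Track recent prediction scores and flag simple threshold breaches."""

    def __init__(self, window=100, min_volume=10, score_range=(0.2, 0.8)):
        self.scores = deque(maxlen=window)  # only the most recent scores are kept
        self.min_volume = min_volume
        self.score_range = score_range

    def record(self, score):
        """Store one prediction score as it is served."""
        self.scores.append(score)

    def alerts(self):
        """Return a list of human-readable alerts for the current window."""
        if len(self.scores) < self.min_volume:
            return ["low prediction volume"]
        avg = mean(self.scores)
        low, high = self.score_range
        if not low <= avg <= high:
            return [f"mean score {avg:.2f} outside [{low}, {high}]"]
        return []


if __name__ == "__main__":
    monitor = PredictionMonitor(window=50, min_volume=5)
    for score in [0.4, 0.5, 0.6, 0.5, 0.45, 0.55]:
        monitor.record(score)
    print(monitor.alerts())
```

A scheduled job could call `alerts()` every few minutes and forward any messages to email or chat. That covers the "simple alerting for critical thresholds" item with very little infrastructure.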

For smaller organizations, this "MLOps Essentials" approach can be implemented in 6-8 weeks and typically requires just 1-2 dedicated team members with support from existing development teams. The ROI on this investment is consistently high, with organizations reporting 3-5x returns through reduced maintenance costs, improved model performance, and faster deployment cycles.

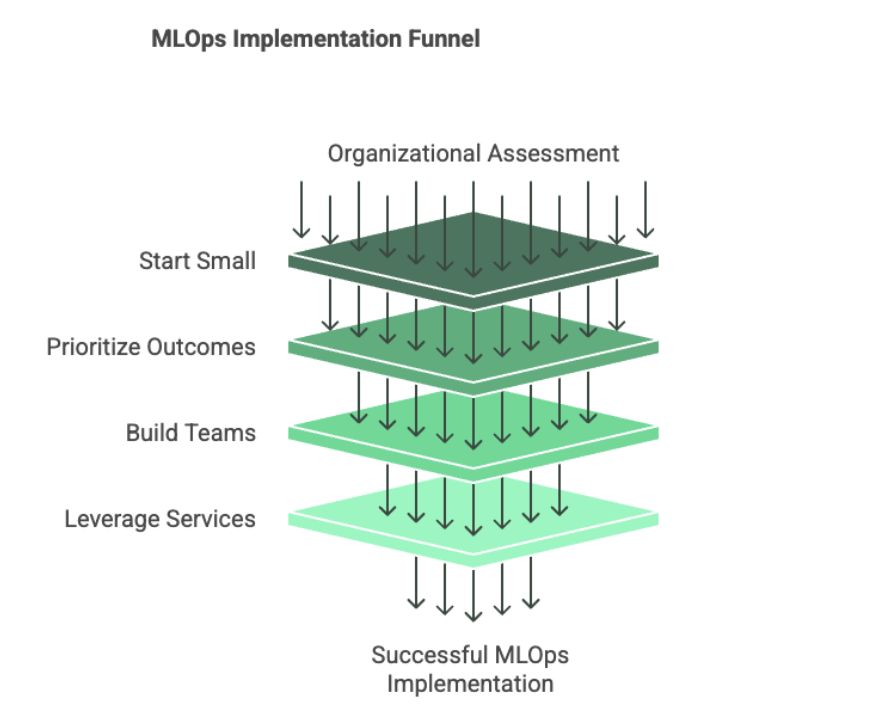

Strategic Recommendations

To successfully implement MLOps in your organization, consider these key strategic actions:

- Assess Your Current State: Evaluate your organization against the four stages of MLOps maturity to identify critical gaps.

- Start Small, Scale Gradually: Begin with a single high-value ML use case and implement basic MLOps practices before expanding.

- Prioritize Business Outcomes: Focus MLOps investments on capabilities that directly enable specific business value (e.g., faster time-to-market, improved model accuracy).

- Build Cross-Functional Teams: Successful MLOps requires collaboration between data scientists, engineers, and business stakeholders—create team structures that facilitate this interaction.

- Leverage Managed Services: Platforms like AWS SageMaker, Databricks, and specialized MLOps tools can accelerate implementation for resource-constrained teams.

Conclusion

As we've demonstrated through our CoffeeBeans AI Readiness Continuum© and Data Source Mapping frameworks in previous articles, becoming AI-ready is a journey that requires strategic investments in foundational capabilities. MLOps represents a critical bridge between AI experimentation and sustainable business value—the key to transforming promising prototypes into production systems that deliver measurable ROI.

By implementing appropriate MLOps practices for your organization's size and AI maturity level, you can dramatically accelerate your journey from AI concepts to business impact. The key is starting with the right scope, focusing on business outcomes, and building capabilities incrementally rather than attempting a complete transformation overnight.